NiFi and Kafka are data engineering tools used to build data pipelines. Both are Apache projects. In this article, we take a deep dive into both of them.

Let's start by defining each tool and the differences between NiFi and Kafka.

What is NiFi

NiFi was built to automate and manage the flow of data between systems. It solves the problem of automating data sharing from one system to another, a problem that has existed ever since enterprises had more than one system. The problems and solution patterns that emerged from this space have been discussed and documented extensively.

In simple terms, NiFi is a data flow tool designed to fill the role of batch scripts at the ever-increasing scale of big data. Rather than requiring people to maintain and monitor scripts as environments change, NiFi lets end users maintain flows, easily add new data sources and targets, and do all of this with full data provenance and replay capability throughout.

What is Kafka

Apache Kafka is a distributed data store optimized for ingesting and processing streaming data in real time. Streaming data is data that is continuously generated by thousands of data sources, which typically send in data records simultaneously. A streaming platform needs to handle this constant influx of data and process it sequentially.

Put simply, Apache Kafka is a community-developed distributed event streaming platform capable of handling trillions of events a day. Initially conceived as a messaging queue, Kafka is based on the abstraction of a distributed commit log. Since LinkedIn created it and open-sourced it in 2011, Kafka has quickly evolved from a messaging queue into a full-fledged event streaming platform.
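The commit-log abstraction is easier to picture with a toy model. The Python sketch below is a single-process illustration under simplifying assumptions, not Kafka's implementation: the `CommitLog` class and its methods are hypothetical, and a real Kafka topic is split into partitions that are replicated across brokers. It shows the two properties that matter here: records are only ever appended, and each reader tracks its own offset.

```python
from dataclasses import dataclass, field


@dataclass
class CommitLog:
    """Toy append-only log (hypothetical): records are appended, never modified."""
    records: list = field(default_factory=list)

    def append(self, record: bytes) -> int:
        # The offset of a record is simply its position in the log.
        self.records.append(record)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 10) -> list:
        # Readers pull forward from an offset they track themselves.
        # Reading does not remove anything, so many readers can share one log.
        return self.records[offset:offset + max_records]


log = CommitLog()
for event in [b"login", b"click", b"logout"]:
    log.append(event)

# Two independent readers positioned at different offsets in the same log.
print(log.read(0))
print(log.read(2))
```

Because reading never removes records, a slow consumer and a fast consumer can work through the same log at different positions, which is a key difference from a classic message queue.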

Confluent, founded by the original developers of Apache Kafka, offers a commercial distribution of Kafka called Confluent Platform. Confluent Platform extends Kafka with additional community and commercial features designed to improve the streaming experience for both operators and developers running Kafka in production at massive scale.

NiFi vs Kafka

| NiFi | Kafka |
|---|---|
| Provides dataflow solution | Provides durable stream store |
| Centralized management, from edge to core | Decentralized management of producers & consumers |
| Great traceability, event-level data provenance starting when data is born | Distributed data durability |
| Real-time operational visibility | Low latency |

Integrating NiFi and Kafka

This section is adapted from the post “Integrating Apache NiFi and Apache Kafka” by Bryan Bende.

Thanks to NiFi’s isolated classloading, a single NiFi instance can support multiple versions of the Kafka client. The Apache NiFi 1.0.0 release contains the following Kafka processors:

- GetKafka & PutKafka using the 0.8 client

- ConsumeKafka & PublishKafka using the 0.9 client

- ConsumeKafka_0_10 & PublishKafka_0_10 using the 0.10 client

Which processor to use depends on the version of the Kafka broker you are communicating with, since Kafka does not necessarily provide backward compatibility between versions.
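The selection rule above can be written as a small lookup. This is a hypothetical helper, not part of NiFi: `PROCESSORS_BY_CLIENT` and `pick_processors` are illustrative names, the mapping comes only from the NiFi 1.0.0 list above, and matching on the major.minor part of the broker version is a simplifying assumption.

```python
# Consumer/producer processor pairs shipped with Apache NiFi 1.0.0,
# keyed by the Kafka client version they use (from the list above).
PROCESSORS_BY_CLIENT = {
    "0.8": ("GetKafka", "PutKafka"),
    "0.9": ("ConsumeKafka", "PublishKafka"),
    "0.10": ("ConsumeKafka_0_10", "PublishKafka_0_10"),
}


def pick_processors(broker_version: str) -> tuple:
    """Return (consumer, producer) processor names for a broker version.

    Hypothetical helper: only the major.minor prefix is compared,
    e.g. "0.10.0.1" -> "0.10". Raises ValueError if no pair matches.
    """
    major_minor = ".".join(broker_version.split(".")[:2])
    if major_minor not in PROCESSORS_BY_CLIENT:
        raise ValueError(f"No NiFi 1.0.0 Kafka processor for broker {broker_version}")
    return PROCESSORS_BY_CLIENT[major_minor]


print(pick_processors("0.10.0.1"))
```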

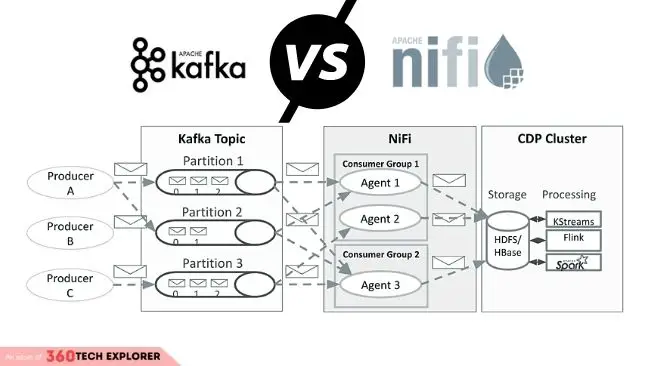

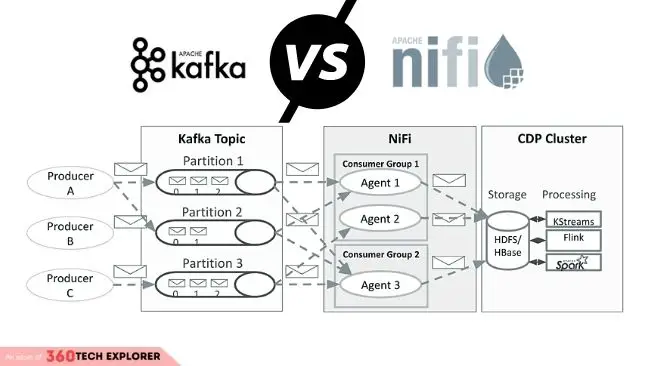

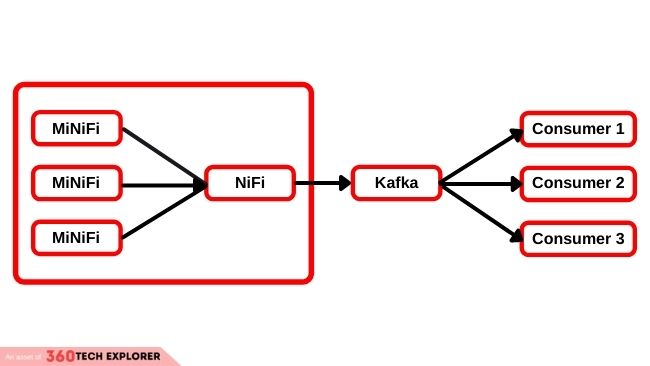

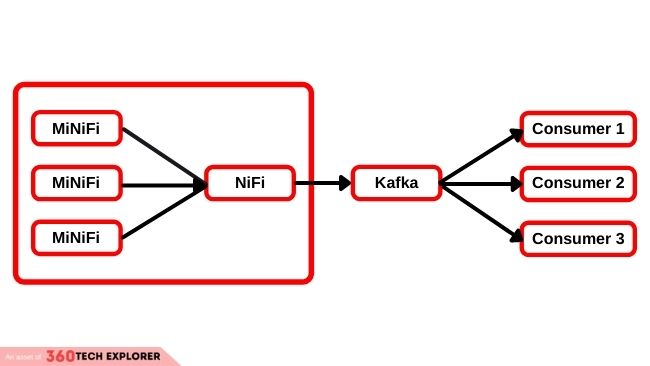

NiFi as a Producer

A common scenario is for NiFi to act as a Kafka producer. With the advent of the Apache MiNiFi sub-project, MiNiFi can bring data from sources directly to a central NiFi instance, which can then deliver data to the appropriate Kafka topic. The major benefit here is being able to bring data to Kafka without writing any code, by simply dragging and dropping a series of processors in NiFi, and being able to visually monitor and control this pipeline.

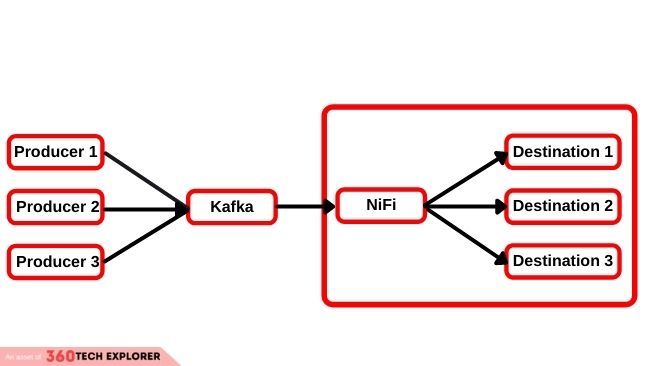

NiFi as a Consumer

In some scenarios, an organization may already have an existing pipeline bringing data to Kafka. In this case, NiFi can take on the role of a consumer and handle all of the logic for taking data from Kafka to wherever it needs to go. The same benefit as above applies here. For example, you could deliver data from Kafka to HDFS without writing any code and could make use of NiFi’s MergeContent processor to take messages coming from Kafka and batch them together into appropriately sized files for HDFS.
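To illustrate why batching matters for HDFS, which handles fewer, larger files better than many tiny ones, here is a minimal Python sketch of size-based batching. It is a simplified stand-in, not MergeContent's actual algorithm: the function `batch_by_size`, the `target_bytes` threshold, and the newline delimiter are assumptions made for this example. In NiFi itself you would get this behavior by configuring MergeContent rather than writing code.

```python
from typing import Iterable, List


def batch_by_size(messages: Iterable[bytes], target_bytes: int) -> List[bytes]:
    """Concatenate small messages into larger blobs of roughly target_bytes.

    A batch is flushed as soon as the summed message sizes reach
    target_bytes; any leftover partial batch is flushed at the end.
    Messages inside a batch are joined with a newline (an assumption).
    """
    batches: List[bytes] = []
    current: List[bytes] = []
    size = 0
    for msg in messages:
        current.append(msg)
        size += len(msg)
        if size >= target_bytes:
            batches.append(b"\n".join(current))
            current, size = [], 0
    if current:
        batches.append(b"\n".join(current))
    return batches


msgs = [b"event-%d" % i for i in range(10)]  # each message is 7 bytes
files = batch_by_size(msgs, target_bytes=20)
print(len(files))  # 4 batches: 3 + 3 + 3 + 1 messages
```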

Bi-Directional Data Flows

A more complex scenario could combine the power of NiFi, Kafka, and a stream processing platform to create a dynamic, self-adjusting data flow. In this case, MiNiFi and NiFi bring data into Kafka, which makes it available to a stream processing platform or other analytic platforms. The results are written back to a different Kafka topic that NiFi consumes from, and NiFi pushes them back to MiNiFi to adjust collection.

An additional benefit in this scenario is that if we need to do something else with the results, NiFi can deliver this data wherever it needs to go without having to deploy new code.

PublishKafka

PublishKafka acts as a Kafka producer and distributes data to a Kafka topic based on the number of partitions and the configured partitioner; the default behavior is to round-robin messages between partitions. Each instance of PublishKafka has one or more concurrent tasks executing (i.e. threads), and each of those tasks publishes messages independently.
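The default round-robin behavior can be modeled in a few lines of Python. This is a hypothetical sketch, not PublishKafka's code: `round_robin_partitioner` and `publish` are illustrative names, and the model covers a single task only. Since each concurrent task in PublishKafka publishes independently, the interleaving between tasks is not captured here.

```python
from collections import Counter
from itertools import cycle


def round_robin_partitioner(num_partitions: int):
    """Return an iterator yielding 0, 1, ..., n-1, 0, 1, ... forever."""
    return cycle(range(num_partitions))


def publish(messages, num_partitions):
    """Assign each message to a partition in round-robin order.

    Returns (partition, message) pairs. Models one task; in this model,
    each additional concurrent task would run its own independent loop.
    """
    partitioner = round_robin_partitioner(num_partitions)
    return [(next(partitioner), msg) for msg in messages]


assignments = publish([f"msg-{i}" for i in range(7)], num_partitions=3)
print(Counter(p for p, _ in assignments))  # Counter({0: 3, 1: 2, 2: 2})
```

With seven messages and three partitions, no partition receives more than one message above any other, which is the even spread round-robin is meant to provide.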

ConsumeKafka

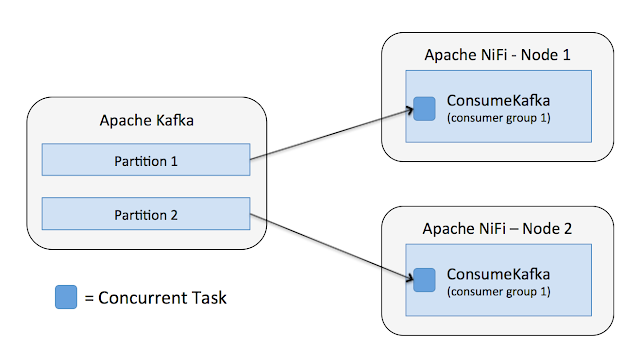

On the consumer side, it is important to understand that Kafka’s client assigns each partition to a specific consumer thread, such that no two consumer threads in the same consumer group will consume from the same partition at the same time. This means that NiFi will get the best performance when the partitions of a topic can be evenly assigned to the concurrent tasks executing the ConsumeKafka processor.

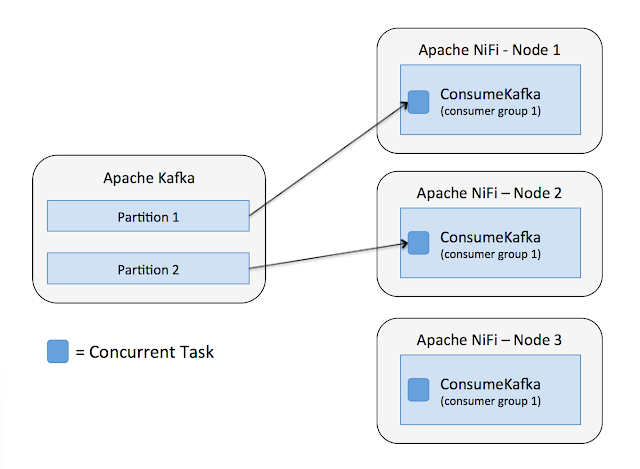

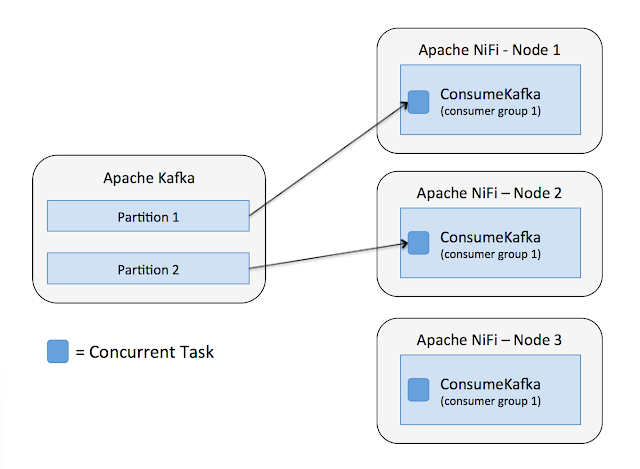

Let's say we have a topic with two partitions and a NiFi cluster with two nodes, each running a ConsumeKafka processor for the given topic. By default, each ConsumeKafka processor has one concurrent task, so each task consumes from a separate partition, as shown below.

Now let us say we still have one concurrent task for each ConsumeKafka processor, but the number of nodes in our NiFi cluster is greater than the number of partitions in the topic. We would end up with one of the nodes not consuming any data as shown below.

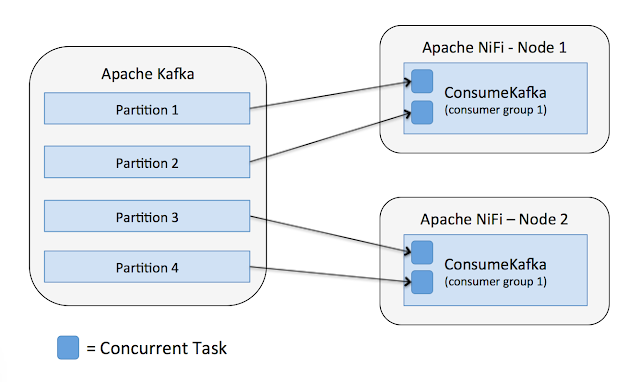

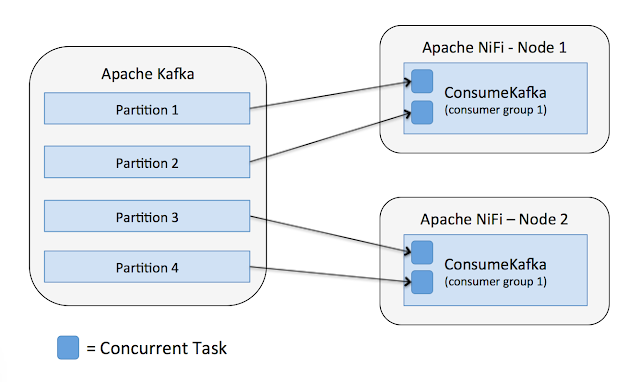

If we have more partitions than nodes/tasks, then each task consumes multiple partitions. In this case, with four partitions and a two-node NiFi cluster with one concurrent task for each ConsumeKafka, each task would consume from two partitions, as shown below.

Now if we have two concurrent tasks for each processor, the total number of tasks (four) matches the number of partitions, and each task consumes from one partition.

If we increased the concurrent tasks to two per node but had only two partitions, then two of the four tasks would not consume any data. Note that there is no guarantee which of the four tasks would consume data in this case; it is possible that both active tasks would be on the same node, leaving the other node idle.

The take-away here is to think about the number of partitions vs. the number of consumer threads in NiFi and adjust as necessary to create the appropriate balance.
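The scenarios above can be checked with a small simulation. This Python sketch is a simplified model under stated assumptions: `assign_partitions` is a hypothetical round-robin assignor over a fixed ordering of tasks, whereas Kafka's real group assignment may order consumers differently, so which tasks end up idle is not guaranteed. What it does preserve is the rule from the text: within one consumer group, each partition goes to exactly one task.

```python
from typing import Dict, List


def assign_partitions(num_partitions: int, nodes: int,
                      tasks_per_node: int) -> Dict[str, List[int]]:
    """Spread partitions over all consumer tasks in one consumer group.

    Hypothetical round-robin assignor: partition i goes to task
    i % total_tasks, so no two tasks ever share a partition.
    """
    tasks = [f"node{n}-task{t}" for n in range(nodes) for t in range(tasks_per_node)]
    assignment: Dict[str, List[int]] = {task: [] for task in tasks}
    for partition in range(num_partitions):
        assignment[tasks[partition % len(tasks)]].append(partition)
    return assignment


scenarios = [
    (2, 2, 1),  # 2 partitions, 2 nodes, 1 task each: one partition per task
    (2, 3, 1),  # more nodes than partitions: one node sits idle
    (4, 2, 1),  # more partitions than tasks: two partitions per task
    (4, 2, 2),  # tasks match partitions: one partition per task
    (2, 2, 2),  # more tasks than partitions: two of four tasks idle
]
for partitions, nodes, tasks in scenarios:
    result = assign_partitions(partitions, nodes, tasks)
    idle = [t for t, parts in result.items() if not parts]
    print(partitions, nodes, tasks, result, "idle:", idle)
```

In the last scenario this particular assignor gives both partitions to the two tasks on `node0`, leaving `node1` idle, which is the uneven outcome the text warns is possible. In this model, with P partitions and T total tasks, max(0, T - P) tasks sit idle.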

Conclusion

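NiFi and Kafka complement each other: NiFi manages, routes, and tracks the flow of data between systems, while Kafka provides a durable, low-latency stream store. Both are well worth learning more about.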

If you have any questions regarding this blog post, feel free to leave a comment.